Summarize RSS Feeds with Local LLMs: Ollama, Open-WebUI, and Matcha Guide

Fear of missing out (FOMO)

Striking a balance between staying updated and avoiding information overload is essential, especially in the fast-moving IT industry. The urge to constantly monitor news can quickly drain your productivity and impact your well-being. This article shows how you can use large language models (LLMs) to automatically summarize RSS feeds, so that you can keep up with key developments efficiently and focus on what truly matters.

I am pretty sure there are already services that can summarize your RSS feeds for a fee, but fortunately, you can run LLMs locally to save money and gain experience with the tooling at the same time.

Running LLMs locally

There are two popular tools to run large language models (LLMs) locally: Local-AI and Ollama.

Adjust resources in the Docker environment

If you run these tools in Docker containers, increase the CPU and memory limits of your Docker environment, because LLMs need significant memory and CPU power. For example, if you use Colima as your Docker runtime, it allocates 2 CPUs and 2 GB of memory by default. To adjust these settings:

colima start --cpu 6 --memory 10

You can check the current resource allocation with:

colima list

Local-AI can be used as an all-in-one Docker image with a pre-configured set of models (text-to-speech, speech-to-text, image generation, etc.), and it has a nice UI. However, after installing it, chat completion with deepseek-r1 did not work for me. I could not find a solution in the issues of the official repository, so I decided to use Ollama instead.

Ollama & open-webui

Ollama can also run in a Docker container, but it doesn’t come with a UI. Fortunately, Open-WebUI provides a user-friendly web interface for interacting with your locally running models. It makes it easy to chat with models, manage conversations, and access advanced features without needing to use the command line.

I asked GitHub Copilot to create a docker-compose file for me, and with a few tweaks I got a working docker-compose.yaml:

services:
  ollama:
    image: ollama/ollama:latest
    container_name: ollama
    ports:
      - "11434:11434"
    volumes:
      - ollama_data:/root/.ollama
    environment:
      - OLLAMA_MODELS=/root/.ollama/models
    restart: unless-stopped

  open-webui:
    image: ghcr.io/open-webui/open-webui:main
    container_name: open-webui
    ports:
      - "3000:8080"
    volumes:
      - ollama_data:/app/backend/data
    environment:
      - OLLAMA_API_BASE_URL=http://ollama:11434
      - WEBUI_AUTH=False
    depends_on:
      - ollama
    restart: unless-stopped

volumes:
  ollama_data:

Ollama supports many models. See the full list here. The official Ollama Docker image does not include any models by default. To install a model, run the pull command inside the running container (this example uses Granite by IBM):

docker exec -it ollama ollama pull granite3.3:latest

The attached volume keeps your models, so you do not need to reinstall them after a restart. Verify that Ollama is up and running:

curl http://localhost:11434/
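The same check can also be scripted. The following is a minimal Python sketch, assuming Ollama is reachable on the port mapped in the compose file above. It queries Ollama's `/api/tags` endpoint, which lists the locally installed models. The helper names `parse_model_names` and `list_models` are illustrative and not part of any library.

```python
import json
import urllib.request

# Assumption: the port mapping from docker-compose.yaml (11434 on the host).
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: dict) -> list[str]:
    """Extract model names from the JSON body returned by /api/tags."""
    return [model["name"] for model in payload.get("models", [])]


def list_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask a running Ollama instance which models are installed.

    Raises OSError (for example URLError) if Ollama is not reachable.
    """
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return parse_model_names(json.load(resp))
```

Calling `list_models()` after the pull command above should return a list that contains the pulled model name; an empty list means Ollama is running but no model has been installed yet.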

Open-WebUI will be available at http://localhost:3000/.

One of the key advantages of Ollama is its REST API, which is compatible with the OpenAI API. This compatibility allows you to seamlessly integrate Ollama with applications and tools designed for OpenAI, making it a flexible choice for local LLM deployments.
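To illustrate this compatibility, here is a minimal Python sketch that sends an OpenAI-style chat completion request to Ollama using only the standard library. It assumes the default port and the granite3.3 model used elsewhere in this article; the function names are hypothetical helpers written for this example.

```python
import json
import urllib.request

BASE_URL = "http://localhost:11434/v1"  # Ollama's OpenAI-compatible API root
MODEL = "granite3.3:latest"             # assumption: a model that is already pulled


def build_chat_request(prompt: str, model: str = MODEL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request aimed at Ollama."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of a chat completion response."""
    return response["choices"][0]["message"]["content"]


def chat(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local model and return its answer."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout) as resp:
        return extract_reply(json.load(resp))
```

With the containers running, `chat("Summarize RSS in one sentence.")` should return the model's answer as a string. The request and response shapes are the same ones an OpenAI client would use, which is why tools built for OpenAI can be pointed at this base URL.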

Summarize RSS feeds

Matcha

When I was looking for a free service to summarize RSS feeds, I came across Matcha. It’s an app written in Go, which makes it quite easy to fork and extend if you need to.

This is how the author of the app describes it:

Matcha is a daily digest generator for your RSS feeds and interested topics/keywords. By using any markdown file viewer (such as Obsidian) or directly from terminal (-t option), you can read your RSS articles whenever you want at your pace, thus avoiding FOMO throughout the day.

Once you’ve downloaded the binary for your platform from the release page, you can run it to generate the default config.yml file:

markdown_dir_path:
feeds:
  - http://hnrss.org/best 10
  - https://waitbutwhy.com/feed
  - http://tonsky.me/blog/atom.xml
  - http://www.joelonsoftware.com/rss.xml
  - https://www.youtube.com/feeds/videos.xml?channel_id=UCHnyfMqiRRG1u-2MsSQLbXA
google_news_keywords: George Hotz,ChatGPT,Copenhagen
instapaper: true
weather_latitude: 37.77
weather_longitude: 122.41
terminal_mode: false
opml_file_path:
markdown_file_prefix:
markdown_file_suffix:
reading_time: false
sunrise_sunset: false
openai_api_key:
openai_base_url:
openai_model:
summary_feeds:

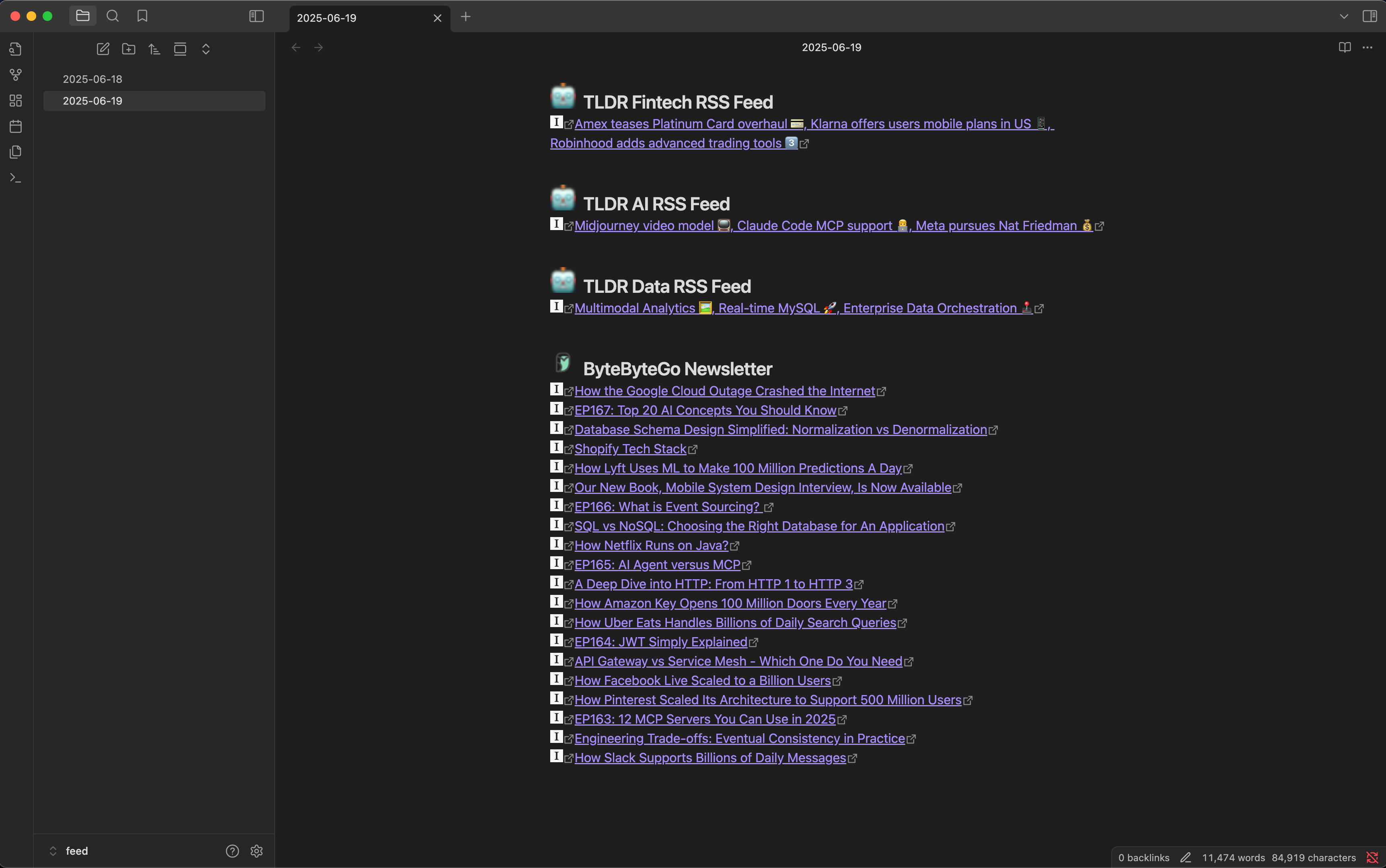

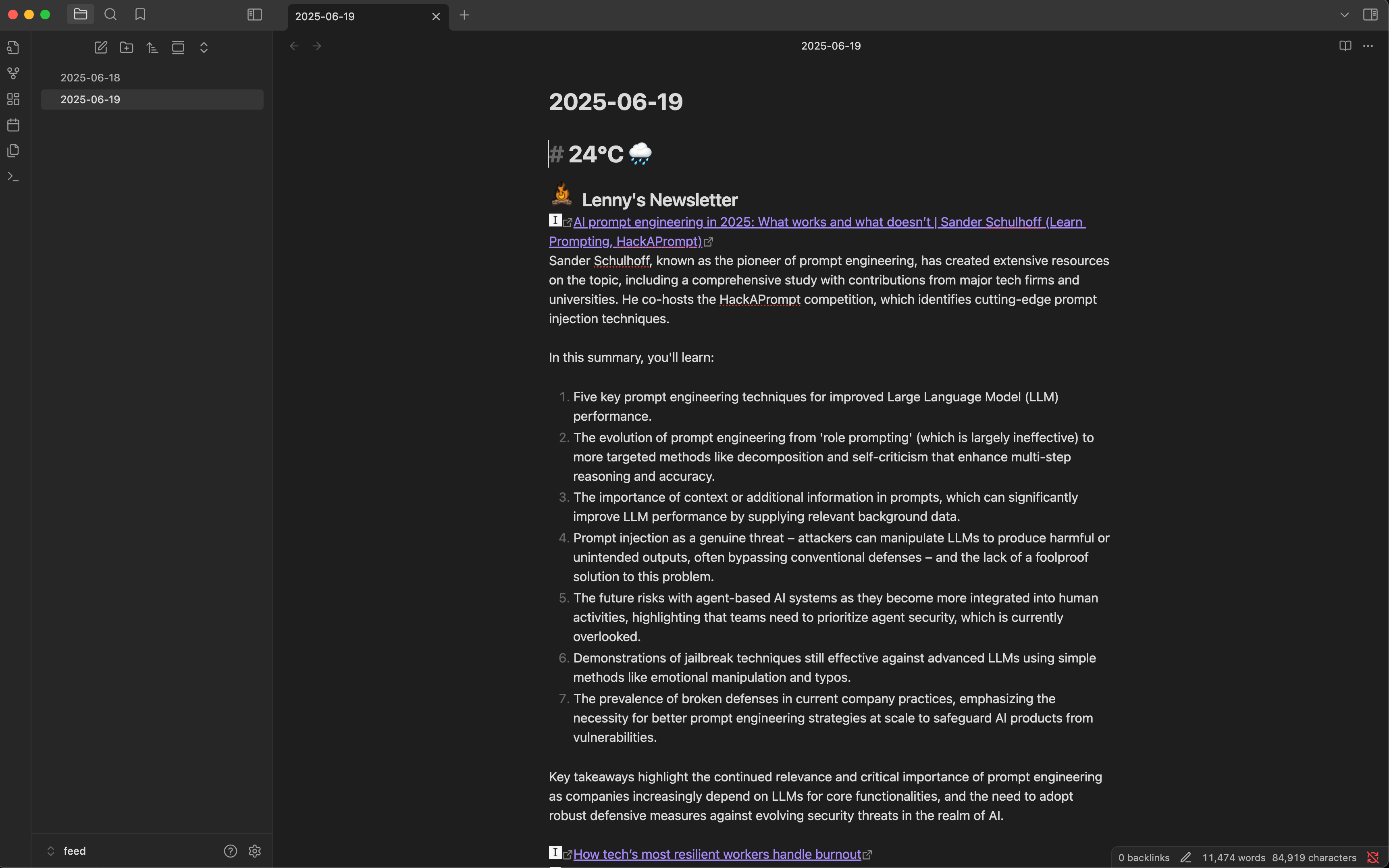

You can specify your favorite RSS feeds and Google News keywords of interest, then run the Matcha binary again. It will generate a well-formatted markdown file that you can open with any markdown reader. The author recommends Obsidian, which is popular for its local-first approach and support for plain .md files. Personally, I use Notion for note-taking, but I have considered Obsidian in the past. While Obsidian’s paid subscription for cross-device sync wasn’t appealing to me at the time, its use of standard markdown files makes it an excellent choice for reading and organizing these summaries.

The most interesting part is the summary_feeds section, where you can specify which feeds you want to have summarized by your LLM. Now, let’s bring everything together and configure Matcha to use your locally running Ollama model. Here’s the relevant part of the config file:

openai_api_key:
openai_base_url: http://localhost:11434/v1
openai_model: granite3.3:latest
summary_feeds:
  - https://www.lennysnewsletter.com/feed
  - https://newsletter.systemdesign.one/feed

Run the binary again. The output includes links to the original articles and a summary for each of them.
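To make the shape of such a digest more concrete, here is a small Python sketch of the general idea: read the items of an RSS feed and render them as a markdown list with an optional summary per item. This is an illustration only and not Matcha's actual implementation or output format; the sample feed, the function names, and the markdown layout are all made up for this example.

```python
import xml.etree.ElementTree as ET

# A tiny hand-written RSS 2.0 document used instead of a real feed.
SAMPLE_RSS = """<?xml version="1.0"?>
<rss version="2.0"><channel>
  <title>Example feed</title>
  <item><title>First post</title><link>https://example.com/1</link></item>
  <item><title>Second post</title><link>https://example.com/2</link></item>
</channel></rss>"""


def parse_items(rss_xml: str) -> list[tuple[str, str]]:
    """Return (title, link) pairs for every <item> in an RSS 2.0 document."""
    root = ET.fromstring(rss_xml)
    return [
        (item.findtext("title", default=""), item.findtext("link", default=""))
        for item in root.iter("item")
    ]


def to_markdown(items: list[tuple[str, str]], summaries: dict[str, str]) -> str:
    """Render items as a markdown list; summaries are keyed by article link."""
    lines = []
    for title, link in items:
        lines.append(f"- [{title}]({link})")
        summary = summaries.get(link)
        if summary:
            lines.append(f"  {summary}")  # indented so it stays under its bullet
    return "\n".join(lines)
```

In a real pipeline the summaries would come from the local model; here they are passed in as a plain dictionary so the formatting step can be tried without a running LLM.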

With this setup, you can now run Matcha daily to automatically generate a digest of your favorite RSS feeds. The summaries are created by your local LLM, which keeps your reading habits private and saves money. This workflow helps you stay informed without being overwhelmed. Get yourself some coffee ☕️ and enjoy!
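One way to run Matcha daily without an external scheduler is a small long-running script. The Python sketch below is only one possible approach, under two assumptions that you should adapt: the binary is called `./matcha`, and it sits in the same directory as its config.yml. A cron job or a systemd timer would work just as well.

```python
import datetime as dt
import subprocess
import time

MATCHA_BINARY = "./matcha"  # assumption: name and location of the downloaded binary
WORK_DIR = "."              # assumption: directory that contains config.yml


def seconds_until(hour: int, minute: int, now: dt.datetime) -> float:
    """Seconds from `now` until the next occurrence of hour:minute."""
    target = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if target <= now:
        # The time has already passed today, so schedule for tomorrow.
        target += dt.timedelta(days=1)
    return (target - now).total_seconds()


def run_daily(hour: int = 7, minute: int = 0) -> None:
    """Sleep until the configured time, run Matcha, and repeat forever."""
    while True:
        time.sleep(seconds_until(hour, minute, dt.datetime.now()))
        # check=False: a failed run should not stop the following days' runs.
        subprocess.run([MATCHA_BINARY], cwd=WORK_DIR, check=False)
```

Calling `run_daily(7, 0)` would generate the digest every morning at 07:00 local time for as long as the script keeps running.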

There is room for improvement:

- Point Matcha's markdown output at a synced folder (e.g. Dropbox or Google Drive) to get automatic synchronization across multiple devices.

- To avoid excessive costs (especially when using OpenAI), the author limits each article’s text to 5,000 characters before submitting it for summarization, so the summary does not cover the entire article. With a local model, this limit could be raised.

- Automate daily Matcha runs to generate a daily digest of your RSS feeds and their summaries.
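The effect of the 5,000-character limit from the list above can be estimated with a few lines of Python. This sketch is not taken from Matcha's source; it only mirrors the behavior described here (a plain cut after a fixed number of characters) and reports how much of an article would reach the model. The names `MAX_CHARS` and `truncate_for_summary` are hypothetical.

```python
MAX_CHARS = 5000  # the limit described in the list above


def truncate_for_summary(text: str, max_chars: int = MAX_CHARS) -> tuple[str, float]:
    """Cut the text to max_chars and report the fraction that was kept.

    Returns (truncated_text, coverage), where coverage is between 0 and 1.
    """
    truncated = text[:max_chars]
    coverage = len(truncated) / len(text) if text else 1.0
    return truncated, coverage
```

For a 20,000-character article, `truncate_for_summary` keeps the first 5,000 characters and reports a coverage of 0.25, which means the summary would be based on only the first quarter of the text.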
