LLM Performance on Mac: Native vs Docker Ollama Benchmark

LLMs run slowly in Docker on macOS

I started generating a daily RSS digest with Matcha and summarizing it with Ollama; you can find more details in my previous article. However, when there are many articles to summarize, the process becomes quite slow: once, I had to wait almost an hour for the daily digest. This made me think about how to make it faster. My first guess was that the GPU was not being used at all, and I found evidence for this in the Ollama GitHub repository:

> When you run Ollama as a native Mac application on M1 (or newer) hardware, we run the LLM on the GPU.
>
> Docker Desktop on Mac, does NOT expose the Apple GPU to the container runtime, it only exposes an ARM CPU (or virtual x86 CPU via Rosetta emulation) so when you run Ollama inside that container, it is running purely on CPU, not utilizing your GPU hardware.
>
> On PC’s NVIDIA and AMD have support for GPU pass-through into containers, so it is possible for ollama in a container to access the GPU, but this is not possible on Apple hardware.

So let’s install Ollama natively on macOS and run a benchmark to compare the results.

Run an LLM natively on macOS

- Install ollama

brew install ollama - Start ollama

ollama serve - Make sure ollama is up and running

curl http://localhost:11434 - Pull a model (e.g. granite3.3 by IBM)

ollama pull granite3.3:latest - Run a model

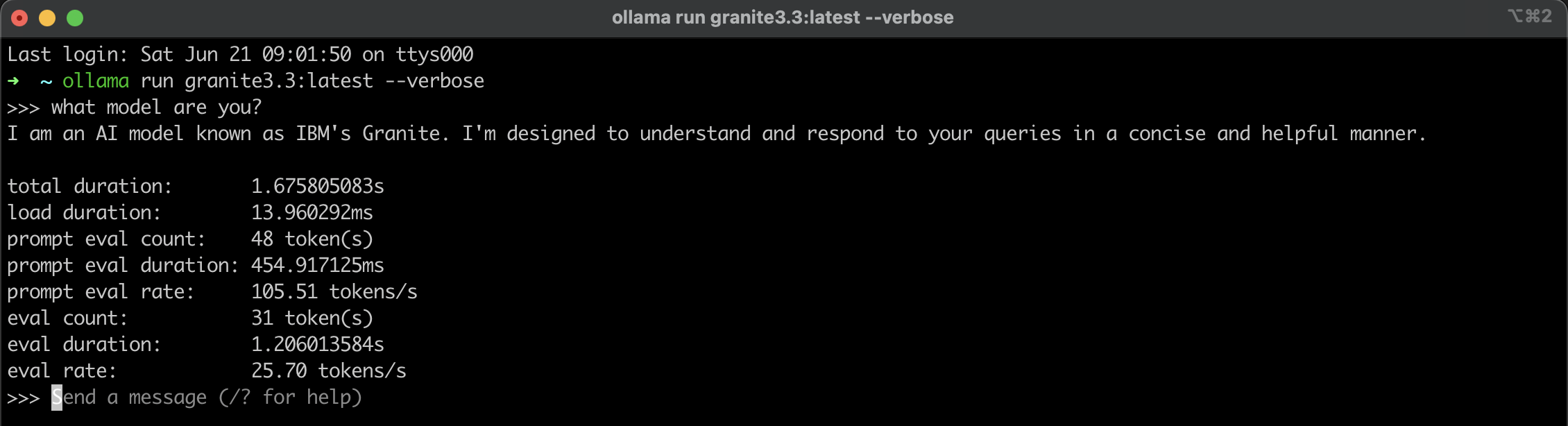

ollama run granite3.3:latest - Adding a flag

--verbosegives you helpful infromation about the performanceollama run granite3.3:latest --verbose
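The eval rate printed by `--verbose` is the number of generated tokens divided by the time spent generating them. Ollama's HTTP API exposes the same two numbers as `eval_count` and `eval_duration` (in nanoseconds) in the final response of `/api/generate`, so the rate can also be computed outside the CLI. The sketch below assumes that relationship; the helper name `eval_rate` is hypothetical, and the commented `curl`/`jq` call assumes a running server and an installed `jq`.

```shell
# Hypothetical helper: tokens per second from Ollama's eval_count (tokens)
# and eval_duration (nanoseconds), mirroring the "eval rate" of --verbose.
eval_rate() {
  local count="$1" duration_ns="$2"
  awk -v n="$count" -v d="$duration_ns" 'BEGIN { printf "%.2f\n", n / (d / 1e9) }'
}

# Usage against a local server (assumes Ollama is running and jq is installed):
#   resp=$(curl -s http://localhost:11434/api/generate \
#     -d '{"model": "granite3.3:latest", "prompt": "Why is the sky blue?", "stream": false}')
#   eval_rate "$(echo "$resp" | jq -r .eval_count)" "$(echo "$resp" | jq -r .eval_duration)"
```

For example, 486 tokens generated in 20 seconds (20000000000 ns) gives 24.30 tokens/s.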

Run a benchmark

We live in remarkable times. Whenever I face a challenge, I first search online, as there is a good chance that someone else has already encountered and solved a similar problem. I wondered whether there was a benchmarking tool for LLMs that I could use, and I discovered llm.aidatatools.com.

Install:

```shell
pip install llm-benchmark
```

Setup:

```text
Total memory size : 32.00 GB
cpu_info: Apple M1 Pro
gpu_info: Apple M1 Pro
os_version: macOS 14.6 (23G80)
ollama_version: 0.9.1
```
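To compare your own Mac with this setup, the same facts can be read with standard macOS tools. This is a sketch and not necessarily how `llm_benchmark` gathers them. `sysctl -n hw.memsize` reports RAM in bytes, and 32.00 GB corresponds to 34359738368 bytes divided by 1024³, so the hypothetical `mem_gb` helper converts with that factor.

```shell
# Hypothetical helper: convert a byte count (e.g. from `sysctl -n hw.memsize`)
# to gigabytes using 1024^3 bytes per GB.
mem_gb() {
  awk -v b="$1" 'BEGIN { printf "%.2f GB\n", b / (1024 * 1024 * 1024) }'
}

# Usage on macOS (not run here):
#   mem_gb "$(sysctl -n hw.memsize)"       # total memory
#   sysctl -n machdep.cpu.brand_string     # CPU name, e.g. Apple M1 Pro
#   sw_vers -productVersion                # macOS version
#   sw_vers -buildVersion                  # macOS build number
#   ollama --version                       # installed Ollama version
```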

It can automatically pick and pull models based on the RAM available on your machine, but you can also create a config file listing the models you’d like to test:

```yaml
file_name: "custombenchmarkmodels.yml"
version: 2.0.custom
models:
  - model: "granite3.3:8b"
  - model: "phi4:14b"
  - model: "deepseek-r1:14b"
```
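Before starting a run, you may want to pull every model listed in the config so that all of them are available locally (`llm_benchmark` may pull missing models itself; this sketch does not rely on that either way). The hypothetical `list_models` helper extracts the `- model:` entries with `sed`. It assumes one quoted entry per line, as in the config above, and is not a general YAML parser.

```shell
# Hypothetical helper: print the value of each `- model: "..."` line in a
# benchmark config. Assumes one quoted model per line; not a real YAML parser.
list_models() {
  sed -n 's/^[[:space:]]*- model:[[:space:]]*"\([^"]*\)"[[:space:]]*$/\1/p' "$1"
}

# Usage (assumes the Ollama server is running):
#   list_models custombenchmarkmodels.yml | while read -r model; do
#     ollama pull "$model"
#   done
```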

Now you can run the benchmark with the models of your choice:

```shell
llm_benchmark run --custombenchmark=path/to/custombenchmarkmodels.yml
```

Here is a sample of the benchmark output for granite3.3:8b running natively:

```text
model_name = granite3.3:8b

prompt = Summarize the key differences between classical and operant conditioning in psychology.
eval rate: 24.49 tokens/s

prompt = Translate the following English paragraph into Chinese and elaborate more -> Artificial intelligence is transforming various industries by enhancing efficiency and enabling new capabilities.
eval rate: 24.93 tokens/s

prompt = What are the main causes of the American Civil War?
eval rate: 24.00 tokens/s

prompt = How does photosynthesis contribute to the carbon cycle?
eval rate: 24.14 tokens/s

prompt = Develop a python function that solves the following problem, sudoku game.
eval rate: 23.93 tokens/s

--------------------
Average of eval rate: 24.298 tokens/s
```
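The reported average is the plain arithmetic mean of the five per-prompt rates: (24.49 + 24.93 + 24.00 + 24.14 + 23.93) / 5 = 121.49 / 5 = 24.298 tokens/s. If you save the output of a run to a file, a short `awk` filter can recompute it. The helper `avg_eval_rate` and the file name `run.log` are hypothetical; the sketch assumes each per-prompt line starts with `eval rate:` as in the sample, and it skips the `Average of eval rate` summary line.

```shell
# Hypothetical helper: average the per-prompt "eval rate: N tokens/s" lines
# read from stdin. The summary line is not matched because its first field
# is "Average", not "eval".
avg_eval_rate() {
  awk '$1 == "eval" && $2 == "rate:" { sum += $3; n++ }
       END { if (n > 0) printf "%.3f tokens/s over %d prompts\n", sum / n, n }'
}

# Usage, assuming the benchmark output was saved to run.log:
#   avg_eval_rate < run.log
```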

During the native run, GPU utilization was consistently close to 100%, confirming that Ollama was able to leverage the Apple M1 Pro’s GPU for accelerated inference.

I ran the same benchmark with Ollama inside a Docker container, where no GPU usage was detected. As expected, the models relied solely on the CPU, and the eval rates were significantly lower.
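The article does not say which tool measured GPU utilization. One quick check is `ollama ps`, which lists the loaded models with a PROCESSOR column showing how each model is split between CPU and GPU (for example `100% GPU` or `100% CPU`). With a native install on Apple Silicon you would expect GPU, and inside Docker on a Mac, CPU. The hypothetical `processor_column` helper extracts that column; its pattern is an assumption about the column's format, and the container name `ollama` in the usage comment is also an assumption. For utilization over time, macOS's `powermetrics` can sample the GPU (requires `sudo`).

```shell
# Hypothetical helper: read `ollama ps` output on stdin and print each model
# name with its PROCESSOR value (e.g. "100% GPU", "100% CPU", "48%/52% CPU/GPU").
# The pattern is an assumption about that column's format.
processor_column() {
  awk 'NR > 1 && match($0, /[0-9]+%(\/[0-9]+%)? (CPU\/GPU|CPU|GPU)/) {
         print $1, substr($0, RSTART, RLENGTH)
       }'
}

# Usage while a model is loaded (e.g. during a benchmark run):
#   ollama ps | processor_column                           # native Ollama
#   docker exec ollama ollama ps | processor_column        # container assumed to be named "ollama"
# GPU activity over time on macOS (5 samples, 1 s apart):
#   sudo powermetrics --samplers gpu_power -i 1000 -n 5
```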

Benchmark results

Benchmark results for Ollama running natively and in a Docker container:

| Model | Avg. Eval Rate (tokens/s) | GPU Utilization | Notes |
|---|---|---|---|
| granite3.3:8b | 24.3 | ~100% | Native, Apple M1 Pro |
| phi4:14b | 14.5 | ~100% | Native, Apple M1 Pro |
| deepseek-r1:14b | 13.7 | ~100% | Native, Apple M1 Pro |
| granite3.3:8b | 4.3 | 0% | Docker, Apple M1 Pro |
| phi4:14b | 2.3 | 0% | Docker, Apple M1 Pro |
| deepseek-r1:14b | 2.4 | 0% | Docker, Apple M1 Pro |

The benchmark confirms the expectation: running Ollama natively on a Mac with Apple Silicon delivers roughly 5.7 to 6.3 times faster LLM inference than running it in Docker (for example, 24.3 vs 4.3 tokens/s for granite3.3:8b), because the native build uses the GPU while the Docker runs are limited to the CPU.
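The speedup for each model is the native eval rate divided by the Docker eval rate from the results table. A small `awk` sketch (the `speedup` helper is hypothetical; the input rows are copied from the table by hand) computes it:

```shell
# Hypothetical helper: read "model native_rate docker_rate" lines on stdin
# and print the native/Docker speedup for each model.
speedup() {
  awk '{ printf "%s %.1fx\n", $1, $2 / $3 }'
}

# Rates (tokens/s) copied from the results table.
printf '%s\n' \
  'granite3.3:8b 24.3 4.3' \
  'phi4:14b 14.5 2.3' \
  'deepseek-r1:14b 13.7 2.4' | speedup
# granite3.3:8b 5.7x
# phi4:14b 6.3x
# deepseek-r1:14b 5.7x
```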
