-

Run Hugging Face LLMs Free on Google Colab

Learn how to run large language models (LLMs) from the Hugging Face Hub for free on Google Colab's T4 GPUs. This guide covers setup and authentication, and explains the key differences between pipeline and InferenceClient for local versus remote model execution.

-

LLM Performance on Mac: Native vs Docker Ollama Benchmark

Discover how to speed up large language model (LLM) inference on Mac by running Ollama natively to leverage Apple Silicon GPU acceleration. This guide compares native and Docker performance, provides step-by-step setup instructions, and shares real benchmark results to help you optimize your AI workflows on macOS.

-

Summarize RSS Feeds with Local LLMs: Ollama, Open-WebUI, and Matcha Guide

Learn how to automatically summarize RSS feeds using local large language models (LLMs) with Ollama, Open-WebUI, and Matcha. This step-by-step guide covers running LLMs in Docker, integrating with OpenAI-compatible APIs, and generating daily markdown digests for efficient news consumption, with no subscription required.

-

How to Install and Use Docker Compose with Colima on macOS

A step-by-step guide to installing and using Docker Compose with Colima as a Docker Desktop alternative on macOS.

-

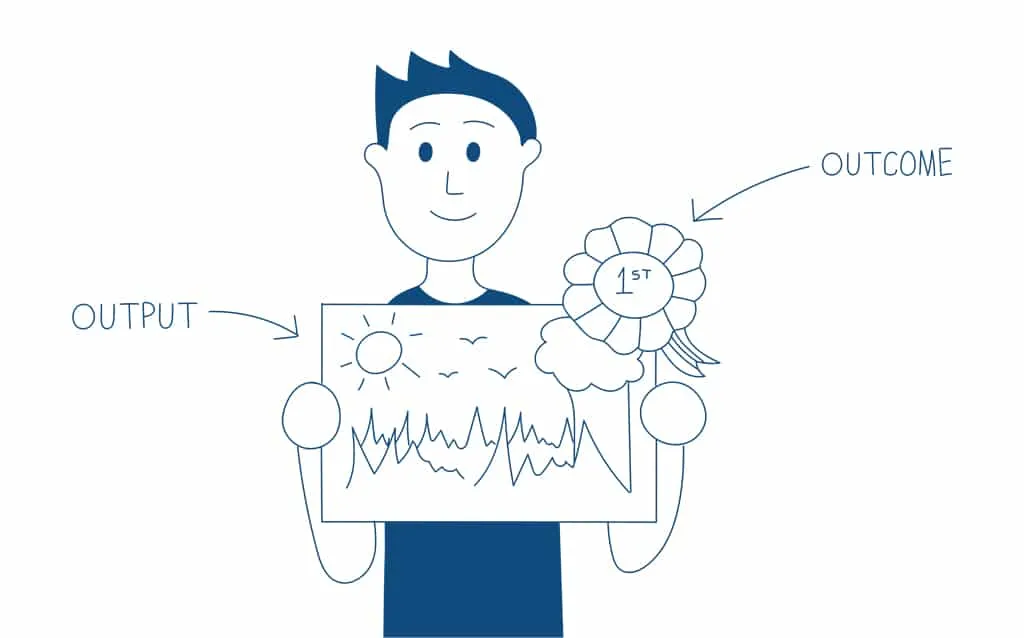

Outcome vs Output

Explore the key difference between outputs and outcomes in product development, and how understanding this distinction helps you focus on delivering real impact rather than just deliverables.